By Herbert Lin and Harold Trinkunas

September 10, 2020

Almost since it first emerged, the SARS-CoV-2 outbreak has been accompanied by a widespread proliferation of misinformation and disinformation, which the World Health Organization (WHO) described as “a massive ‘infodemic’—an over-abundance of information … that makes it hard for people to find trustworthy sources and reliable guidance when they need it.”

Misinformation can be information that is false or inaccurate, whether deliberately or inadvertently so. Disinformation refers to information that is intended to mislead, whether or not the information is literally true. Misinformation and disinformation about COVID-19 fall into several categories, including:

- The biological origin and nature of the virus. One false claim is the insinuation that the virus is the result of Chinese efforts to develop a biological weapon. Another is the claim that COVID-19, the disease, is caused not by a virus but by exposure to 5G wireless signals.

- Diagnoses and/or treatments for the disease. One false claim suggests that the ability to take a deep breath and hold it for more than 10 seconds without discomfort is a reliable indicator of being infection-free. A second false claim was that the FDA had approved chloroquine and hydroxychloroquine as treatments for COVID-19 at a time when it had not. A third false claim, made as early as February, asserted that “we are ‘very close’ to a vaccine.” A fourth was that people with the disease get better “just by sitting around and even going to work.” In addition, an anti-vaccination disinformation effort based in California released a widely viewed 26-minute conspiracy theory video, “Plandemic,” which claimed that COVID-19 responses were a cover for forced mass vaccinations.

- Public measures to respond to the epidemic. One false claim, made on February 24, was that the coronavirus was very much under control in the United States. A second, made on March 6, was that any person in the United States who wanted a SARS-CoV-2 test could get one. There are also multiple examples of governments dissimulating about the extent to which COVID-19-related illnesses and deaths have affected their populations, including Russia, China, and even the American state of Florida.

The combination of the psychology of pandemic, which causes societies to grasp at (mis)information in the midst of panic, and political polarization, which leads people to attribute partisan motivations to public health measures, has made the spread of false information about COVID-19 particularly problematic. This combination is compounded by a global information ecosystem that combines traditional and social media and interacts with human cognition in ways that accelerate the spread of misinformation. In addition, today’s global media environment enables states and other actors to actively spread disinformation on an unprecedented scale to achieve geopolitical objectives or sow confusion among their adversaries, amplified by unwitting online communities predisposed to believe and repeat false information about the pandemic. Together, these factors have fueled the virulent spread of disinformation and misinformation about the novel coronavirus, and they challenge the capacity of states and public health authorities to develop, implement, and communicate scientific evidence-based responses to the COVID-19 pandemic.

Several steps will be necessary to combat misinformation and disinformation campaigns regarding COVID-19. First, private sector specialists in risk communications, advertising, linguistics, and social cognition could collaborate in devising accurate public health information that is targeted and crafted to overcome psychological mechanisms of resistance.

Second, governments could enact regulations that discourage microtargeting of social media advertisements and eliminate or circumscribe the freedom from liability currently enjoyed by platform and social media companies for the publication of information for which they might otherwise be held legally responsible. Government agencies that regulate medical product and device advertising also might expand their oversight to medical claims related to COVID-19.

Third, and perhaps most important, government leaders must respect factual information. It is clear that anti-intellectual populist leaders inclined to downplay and minimize the risk of the pandemic for political ends have contributed to the current infodemic in ways that are both inimical to democracy and dangerous to public health. In democracies, at least, voters can change their leaders.

The psychology of pandemic. The first widely reported indications of COVID-19 appeared late in December 2019, when the local government of Wuhan, China, said that it was treating dozens of cases of pneumonia of unknown cause. In mid-January 2020, Chinese state media reported the first death from COVID-19, and the first cases appeared outside China in a number of nations, including the United States, Taiwan, Japan, Thailand, and South Korea. By the end of January, the World Health Organization had declared a global health emergency. By early July, the United States had confirmed over two million cases and over 125,000 deaths, more than any other country in the world.

In 1990, Philip Strong described three crucial elements of how human psychology responds as an epidemic unfolds, focusing in particular on epidemics involving novel and deadly disease. These elements are fear, explanation, and moralization, along with actions both taken and proposed. Any society involved in an epidemic, Strong wrote, is likely to “experience simultaneous waves of individual and collective panic, outbursts of interpretation as to why the disease has occurred, rashes of moral controversy, and plagues of competing control strategies, aimed either at containing the disease itself or else at controlling the further epidemics of fear and social dissolution.”

The COVID-19 pandemic has evoked all these responses:

- Fear. With a new disease (or disease vector), an individual is uncertain about how he or she might contract the disease, and the entire environment—people, animals, and inanimate objects—becomes potentially infectious. This is so even if only a small percentage of the population or objects in the environment are potentially contagious. Fear is an especially important factor among the worried well, who may not have any good reason to suspect they have the disease in question but who nonetheless seek out medical attention “just in case.” Fear also underlies the stigmatization of those with the disease and those who are thought, even without evidence, to be infectious carriers.

- Explanation. If little is known about a disease, the population is likely to have a broad range of views about its significance: is it trivial or very important or somewhere in between? Views of the underlying causal factors also vary, and can range from the scientifically well-informed (e.g., the emergence of a new virus) to the supernatural (e.g., the plague as one of God’s punishments for violating a commandment). We have already seen examples of this latter belief among some Americans. For example, Republican state lawmaker Stephanie Borowicz in Pennsylvania introduced a resolution suggesting that the novel coronavirus is a “punishment inflicted upon us for our presumptuous sins” and calling upon lawmakers to designate March 30, 2020, as “A State Day of Humiliation, Fasting, and Prayer” in Pennsylvania.

- Proposed actions. Responses to new and unfamiliar epidemics often span a large range, inevitably impinging on other deeply held values. Such actions are frequently unprecedented, or at least unusual, and they deeply threaten the status quo of behavior and practice. For example, trade, business, and travel may be disrupted; compromises of personal privacy and liberty may be seriously advocated; health education or even health behaviors (e.g., wearing a mask in public, testing) may be mandated. Moreover, because epidemics are by definition times of societal disruption, they present opportunities for those wishing for or working toward societal change to pursue their advocacy and public messaging.

All these elements are magnified when the disease involved is new or strikes in a new way. A previously encountered disease—influenza, for example—will have induced the development and emergence of a variety of individual and societal strategies to deal with it, and the availability of such “scripts” provides citizens with ways to cope.[1] Without available scripts, those affected are free to generate a plethora of vastly different responses. Some will be more extreme than others, and all will compete for attention. In extreme cases, Strong notes that “contagious waves of panic rip unpredictably through both individuals and the body politic, disrupting all manner of everyday practices, undermining faith in conventional authority, feeding on themselves to produce further, more intense panic and collapse.”

In the end, moving past epidemic psychology requires the establishment of new routines and new knowledge that the society as a whole comes to accept as reasonable.

Differential reactions to the infodemic in the United States: Republicans and Democrats. The response of the American public to the COVID-19 epidemic increasingly reflects the polarization seen around other important issues, with Democrats and Republicans responding to the present situation in very different ways. It is unsurprising that Democrats and Republicans assess the president’s management of the epidemic differently: as of July 2, 2020,[2] one respected poll found that 10.4 percent of Democrats and 81.2 percent of Republicans approved of the president’s handling of the COVID-19 outbreak.

But the partisan divide is reflected in other, more surprising ways. As early as March:

- 50 percent of Democrats, compared to about 40 percent of Republicans, said they were washing their hands more often because of the virus.[3]

- About eight percent of Democrats, compared to about three percent of Republicans, said they had changed their travel plans.[4]

- About 40 percent of Democrats, compared to 54 percent of Republicans, said they had not altered their daily routines because of the virus.[5]

- About 40 percent of Democrats, compared to 20 percent of Republicans, said they thought that the coronavirus poses an imminent threat to the nation.[6]

- About 63 percent of Democrats, compared to 33 percent of Republicans, said they were very or somewhat worried about contracting the virus, while 38 percent of Democrats, compared to 67 percent of Republicans, were not too worried or not worried at all.[7]

- About 43 percent of Democrats, compared to 12 percent of Republicans, said they were very concerned about a coronavirus epidemic here in the United States.[8]

- About 35 percent of Democrats, compared to 14 percent of Republicans, said that most Americans were not taking the risks seriously enough.[9] Conversely, 19 percent of Democrats and 40 percent of Republicans thought most Americans were overreacting.

Polling in May and June 2020 showed similar partisan gaps between the views of Democrats and Republicans as the pandemic accelerated in the United States:

- 82 percent of Democratic-leaning adults viewed the coronavirus outbreak as a major threat to the health of the US population, while 43 percent of Republican-leaning adults agreed.[10]

- 68 percent of Democrats supported mask wearing in public at all times, while 34 percent of Republicans agreed.[11]

A couple of stories help to illustrate the partisan divide. In mid-March, Jerry Falwell Jr., president of the evangelical Liberty University in Virginia, said that coverage of the coronavirus was the media’s next attempt, after impeachment and special counsel Robert Mueller’s investigation, to “get Trump.”[12] In late March, he invited students back to campus, saying that “I think we, in a way, are protecting the students by having them on campus together… Ninety-nine percent of them are not at the age to be at risk, and they don’t have conditions that put them at risk.”[13] This invitation was issued over objections from local officials and despite a warning from the head of Liberty University’s student health service that it had “lost the ability to corral this thing.”[14]

In May 2020, the Republican-controlled House of Representatives in Ohio objected to the scope and duration of the state Department of Health’s stay-at-home order and voted to require legislative approval for health orders that extended beyond 14 days and to lower the penalties for violating such orders to a misdemeanor and a small fine.[15] Ohio House Speaker Larry Householder did not wear a mask during the House session to debate the measure, saying he did not own one; most other Republicans also did not wear masks, while most Democrats did.

Liberals and conservatives do find common ground on one point—others (but not “we”) are using the epidemic for political purposes. The Nation ran a piece entitled “6 Ways Trump Is Exploiting This Pandemic For Political Gain,” claiming that “as the rest of the world scrambles to alleviate the effects of the coronavirus, President Trump is using it to advance his agenda.”[16] The co-host of Fox & Friends Weekend, Peter Hegseth, said the Democrats “are rooting for the coronavirus to spread. They’re rooting for it to grow. They’re rooting for the problem to get worse… They’re cheering … for a virus because they hate Donald Trump so much.”[17] Acting White House Chief of Staff Mick Mulvaney even said the media was stoking a coronavirus panic because they hope it will take down President Donald Trump.[18]

Today’s global information ecosystem and human cognition. Strong’s research highlights what has long been known: epidemics and pandemics have always caused widespread alarm, fear, and overreaction. Is the public reaction to COVID-19 simply more of the same?

No. Extrapolating from research in our recent book, we contend that today’s information ecosystem—global in nature and densely interconnected through a variety of social media and traditional news media—amplifies the significance of psychological vulnerabilities long present in all human beings. This ecosystem makes it much easier for people to reject rational and authoritative explanations for security threats and how we should respond; to believe in rumors and misinformation; and to be more vulnerable to deliberate misinformation campaigns. Today’s public reaction to COVID-19 is not merely more of the same, but one in which alarm, fear, and overreaction are greatly exacerbated by technology.

The architecture of the human mind has two systems for processing information: a heuristic, intuitive system (often designated as System 1) and a more deliberate, analytical system (often designated as System 2).[19] All humans engage in System 1 thought, and do so by default: System 1 is always on, it acts reflexively, and it can do so because it does not take account of all available information. All humans also have the capacity to engage in System 2 processing, which is rule-based, abstract, linked to language and conscious thought, and slower.

Most important, System 1 processing works well in many situations because everyday life exhibits regularities that guide the individual to conclusions with minimal mental effort. On the other hand, because salient regularities can be misleading, System 1 processing will sometimes result in erroneous conclusions that would have been caught (and suppressed) if System 2 processing had been involved.

Because individuals are capable of both System 1 and System 2 processing, one of the most interesting questions is the nature of the psychology underlying belief in misinformation or disinformation. If System 2 processing is indeed less likely to result in error, belief in misinformation or disinformation would be correlated with an individual’s failure to engage in System 2 processing.

A number of reasons could account for such failure. For example, motivated reasoning describes the tendency, documented in a substantial body of evidence, of individuals to be more critical of information that is unfavorable to their positions (i.e., to engage System 2 thinking) and less critical in evaluating favorable information.[20] Individuals are also susceptible to confirmation bias: a preferential tendency to seek out information that is consistent with their beliefs and to ignore information that contradicts them.[21] Time pressure to process a given item of information also inhibits System 2 processing (because System 2 is time-intensive), leaving the default System 1 processing in control. System 1 processing is also less demanding of an individual’s cognitive resources, suggesting that individuals under cognitive load (i.e., individuals dividing their attention and cognitive resources among multiple tasks) will also find System 2 processing difficult. Empirical evidence supports both of these propositions.[22]

Social identity (e.g., as a Trump supporter or as a Democrat) also has an important influence on an individual’s engagement or non-engagement with System 2 processing. The evidence suggests that individuals tend to adopt the views of the peer groups that are most important to them, even if the “objective” or “factual” information available to them contradicts those views.[23] Therefore, uncritical System 1 thinking is more often active in processing information consonant with the beliefs and attitudes of those groups, and critical and skeptical System 2 thinking is more often active in processing information that is dissonant. In other words, people are more willing to accept ideas as true because those in their peer groups believe them to be true, regardless of the actual truth or falsity of those ideas. These effects (that individuals tend to accept important group norms) are even more pronounced in an anonymous environment (such as that which characterizes much online interaction).[24]

Finally, emotions and motivations affect cognition. For example, individuals who are angry tend to rely more heavily on simple heuristic cues (suggestive of System 1 thinking) than those who are not angry.[25] Individuals are also likely to stereotype people (a form of System 1 thinking) when that stereotype is consistent with their desired impression of those people; conversely, when the stereotype is inconsistent with their desired impression, individuals tend to inhibit the use of this stereotype.[26] Negative emotions (such as those induced by the receipt of information incongruent with a person’s prior beliefs) can improve the ability of a person to reason logically, suggesting that individuals may be very good indeed at being critical of information that causes them distress—reinforcing the point made above about motivated reasoning.[27] Thus, a person with a liberal political orientation will likely be good at finding the logical flaws in a conservative policy but less able to find the logical flaws in a liberal policy; the reverse would be true as well.

These characteristics of human cognition are all apparent in Strong’s model of epidemic psychology. For example, System 1 is optimized to be sensitive to changes in the environment that might indicate danger, and fear, a basic emotion, is an instinctive response to such changes. Another key element of System 1, the availability heuristic, leads people to estimate the likelihood of an event based on how easy it is to recall similar events: when the information environment is filled with news and gossip about individual disease cases, people will overestimate the prevalence of the disease and act in accordance with that higher but incorrect estimate. Differences over what is responsible for the outbreak and what should be done about it are explainable, at least in part, by factors of social identity. Given the high degree of polarization between Republicans and Democrats on a host of controversial issues, it should not be surprising that identification with one or another of these groups is an important factor affecting one’s choice among the myriad explanations of the disease outbreak and the equally numerous possible courses of action.

System 1 and System 2 thinking have been part of the human cognitive architecture for millennia. However, today’s information ecosystem has been shaped by technologies that are only a few decades old; remember that the World Wide Web has been in widespread use for only a quarter century or so. The natural cognitive processing capabilities of human beings have not changed in any meaningful way in that short time span.

These technologies provide high connectivity; low delays in information delivery; high degrees of anonymity; customized information searches; exposure to information based on internet browsing history, email content, or demographic details; insensitivity to distance and national borders; democratized access to publishing capabilities; and inexpensive production and consumption of information content. These aspects of modern information technologies enable purveyors of misinformation to use automated accounts to amplify messages of misinformation, to drown out authoritative and reliable information, to communicate with large populations at low cost without accountability, and to find sympathetic individuals with whom such information resonates no matter how outlandish the information in question—and who are themselves likely to further spread such information to many others.

The deluge of information and a chaotic information ecosystem lead to information overload in individuals, which in turn increases reliance on System 1 processes. Moreover, the affordances of social media are designed to take advantage of System 1 processing. Purveyors of misinformation use these affordances in their cyber-enabled activities to keep people engaged in System 1 thinking.[28] It is this information ecosystem that has become known as the post-truth society.

False echoes in the information ecosystem. Although propaganda has been a tool of conflict for centuries, the intertwining of social media and traditional news media on a global scale has made propaganda highly cost-effective and ubiquitous in the digital realm. Authors of disinformation campaigns can propagate falsehood at ranges, speeds, and scales unachievable by previous purveyors of propaganda.

This reality came to the attention of the US public with the realization that the 2016 presidential campaign had been targeted by Russian information operations. But such information operations had been detected in over 40 countries as of 2018, and the number is surely higher today. These operations not only target elections but also advance diplomatic and military objectives, as in Russian campaigns against NATO.[29]

Both Russia and China have engaged in disinformation campaigns targeting the United States and other NATO nations, promoting the notion that the novel coronavirus originated in the United States.[30] A reasonably comprehensive inventory of disinformation themes regarding coronavirus was published by the European External Action Service (EEAS)—the European Union’s diplomatic service—in mid-March 2020. Some of the then-trending false narratives included:[31]

- The coronavirus is a biological weapon that has been deployed.

- The coronavirus did not break out in Wuhan, China—the US is concealing its true origin, which is in fact the US or US-owned laboratories across the world.

- The outbreak has been caused by migrants, and migrants are spreading the virus in the EU.

- The EU is not ready to provide urgent support to its member states—instead, they have to rely on external support, with China mentioned most often as the source of such assistance.

- China is coming to rescue the EU as Brussels abandons EU member states.

- The Schengen area no longer exists. Europeans are in quarantine and can’t move freely through the area, but migrants can.

- The coronavirus is a hoax; it does not exist.

- Natural remedies exist to cure the disease; descriptions of those alleged remedies are often combined with anti-vaccination narratives.

Politico magazine discovered a particularly interesting disinformation item: Zvezda, a media company controlled by the Russian armed forces, ran a story saying that Bill Gates had advance knowledge of COVID-19.[32] The People’s Republic of China, meanwhile, has been highly active in casting doubts on the origins of the virus in Wuhan, and leading Chinese diplomats have been actively promoting an erroneous narrative that the novel coronavirus originated as a US bioweapon.[33] These disinformation campaigns—and many other Internet-based disinformation campaigns—are designed to take advantage of the mind hacking that the modern information ecosystem makes possible, placing people in an information-processing mode in which emotion-, identity-, and faith-based responses rather than reason prevail. It is the design of the technology that exacerbates people’s natural inclination to use System 1 processes, thus making it harder for people to choose to use System 2 processing.

It is important to note that deliberate disinformation campaigns do not have to do all the work themselves to promote false or damaging narratives. Much of the work of modern propaganda campaigns can be automated on Facebook and Twitter. Beyond this, modern campaigns craft messages that appeal to pre-existing beliefs and flood the zone with attractive but false narratives, relying on online activist communities of like-minded individuals to echo and amplify their message. Moreover, efforts to counter such campaigns run the risk of amplifying them further as fact-checking services refer to false narratives in order to rebut them, further spreading disinformation.

These campaigns are relatively cheap to operate and so are within the reach of mid-sized countries and reasonably well-funded private organizations. The Russian Internet Research Agency, famously tied to the 2016 disinformation campaign targeting the US presidential election, spent only a few million dollars on its effort, a sum that even civil society organizations or corporations would be able to afford. Such campaigns are conducted on social media platforms, provided and maintained, generally for free, by large technology companies. The platforms are designed to be used with very little training, lowering recruitment costs for disinformation operations.[34]

For their part, platform providers—the social media companies—have little incentive to address the mis- and disinformation problem. Platform providers rely on advertising for revenue. When users spend more time on a platform (dwell time), platform providers can show more ads and make more money.

Platform providers have found that users spend more time on their platforms when they are shown information that is consistent with and congenial to their pre-existing beliefs and ideas as opposed to information that challenges them. Research has also demonstrated that false information is more attractive to users, at least as measured by the greater speed and reach of such information as compared to true information.[35]

Responses to disinformation campaigns are also fraught. For the platform providers, the deliberate correction or suppression of disinformation not only means losing users who are put off by more reasoned or factual information that challenges their worldviews, but also risks exposing the platform companies to accusations of censorship from political activists. For the governments of Western nations, and the United States in particular, traditions and legal precedents protecting free speech cast suspicion on any government action to inhibit expression.

So what? Surely those making decisions don’t believe disinformation. Some might argue that disinformation campaigns are not important because those in positions of authority are surely too educated and rational to believe propaganda. On the contrary, leaders and educated people are just as likely, and in some cases more likely, to believe false narratives as the general population.[36] For example, the erroneous belief that climate change is unrelated to human activity is more likely to be held by conservatives with higher degrees of education than by those with less. Education does not require people to forgo heuristic, System 1 thinking in favor of more analytical and deliberate thought on all issues, but it may well give them more effective tools with which to criticize information they do not like.

Leaders now have easy access to information received from the global information ecosystem, and this is a relatively new phenomenon. During the 20th century, just as traditional media acted as gatekeepers for reliable and even-handed information delivered to the general public, intelligence agencies, alongside the media, served this purpose for leaders. But the democratization of information production and the rise of cyber-enabled disinformation campaigns mean that leaders and populations alike now access and consume the same attractively packaged and appealing disinformation spread by adversaries.[37]

Indeed, as shown by populist leaders’ widespread attacks on traditional media and President Trump’s questioning of the US intelligence community and the so-called deep state, many leaders reject the role of traditional analytical and intelligence agencies in providing reliable information. As a result, some leaders are making decisions based on unvetted and possibly maliciously false information as they respond to crises such as the COVID-19 pandemic.

And now, leaders have even greater incentives to use disinformation campaigns for political, diplomatic and strategic advantage. As Kelly Greenhill argues, extra-factual information can be used both to rally domestic audiences and to signal commitment to courses of action to foreign states. Paradoxically, this signaling can either convince adversaries to back down (to avoid a conflict) or set off an escalatory spiral, as rival states engage in their own disinformation campaigns to signal their own commitment and steadfastness during a conflict.[38]

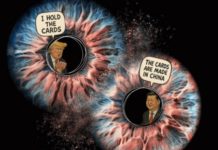

Currently, dueling Chinese and US narratives over the origins of the coronavirus are following such a pattern, with each side punishing onlookers who disagree with its preferred narrative. Chinese diplomats allude to the US origins of the coronavirus on Twitter, while some US senators attribute the virus’s origins to Chinese laboratories. China has threatened economic retaliation against Australia for advocating an official investigation into the origins of the novel coronavirus, and the United States has consistently raised obstacles to G-7 cooperation on pandemic response because of its allies’ unwillingness to call it the “Wuhan” virus, a label intended to stress its Chinese origin.[39]

There is also a risk that leaders will come to believe their own disinformation campaigns. Jeffrey Lewis documents the effects of “blowback” in the nuclear domain, in which countries come to believe their own propaganda. For example, Russian officials have openly expressed concerns that the launch systems for the missile interceptors in Poland could easily be converted to launch nuclear-armed land-attack cruise missiles.[40] Lewis argues that despite the fact that the United States has no plans to use its missile interceptor launch sites in such a manner, “the Russians have pushed this particular conspiracy theory so long—maybe cynically at first, to recreate the Euromissile crisis of the 1980s—that now they might very well believe it.” US officials, for their part, still believe, based on scant contemporary evidence, that Russia has a secret military doctrine of “escalate to de-escalate” involving the use of low-yield nuclear weapons early in a military confrontation with NATO, even though this may well reflect a dated understanding of Russian nuclear strategy, which has since evolved.[41] Each of these sets of beliefs likely originated in misinformation or at least motivated misunderstandings propagated by their own side.

Given the deployment of disinformation campaigns over COVID-19, it is not implausible that statements made for strategic effect will come to be believed by a wider segment of the US leadership, one predisposed to accept such information because it agrees with long-held beliefs. For example, there is a legitimate scientific debate over the origin of SARS-CoV-2. One proposed theory is that the virus escaped from the Wuhan Institute of Virology (WIV) via some as-yet-unknown mechanism.[42] Another is that the virus had natural origins, passing from bats to some other species and then to humans.[43] But the scientific work needed to ascertain which (if either) of these theories is true has not yet been done. For example, complete records of the WIV have not been made public. Until such work is done, the origin of the virus will remain unknown.

China should be pressed to allow access to WIV records, and indeed the United States and others have asked China for such access.[44] But when the US Secretary of State asserts that the novel coronavirus originated in a Chinese laboratory[45] without acknowledging the scientific uncertainty about its origins, that assertion counts as disinformation, because it expresses certainty where none exists. As for strategic effect, the claim that the virus “originated” in a laboratory conjures images of its being created there, even though no such assertion has been made: the WIV was known to have been studying bat coronaviruses, so release of the virus from the laboratory is the most reasonable meaning of “originate.” Furthermore, once the idea that the virus was “created” in the laboratory takes hold, it is only a very short step to the conclusion that it was created deliberately. All of these notions play to the preconceived views of China hawks.

Indeed, a Pew poll released in early April 2020 found that 23 percent of Americans thought it most likely that the novel coronavirus was deliberately created in a laboratory, while 6 percent pointed to accidental creation in a laboratory.[46] Pew also found that Republicans and Republican-leaning independents were more likely than Democrats and Democratic leaners to say the coronavirus was created in a lab (37 percent vs. 21 percent).

Successful response to a pandemic requires millions of individual decisions to follow best health and sanitary practices, both for personal protection and to protect the “herd.” But today’s information ecosystem makes it harder to propagate good behavioral practices than it was in pre-internet days. Many actors in the new information ecosystem erode the credibility of authoritative sources of information, especially when leaders fall prey to disinformation and misinformation. Indeed, some of these leaders even deliberately propagate misinformation and disinformation for political or diplomatic ends. In addition, the openness of the present system to misuse makes disinformation campaigns targeted at both the public and leaders a low-cost, low-risk proposition, especially when those looking to sow confusion can co-opt unwitting accomplices to help them. And the social media companies that provide the platforms on which these campaigns are waged have advertising-based business models that reward “engagement.” Those models discourage efforts to limit misinformation flows, as does the risk that such efforts will draw accusations of censorship from political activists.

So what is to be done?

The easiest “to-do” involves the dissemination of accurate, science-based information via methods that, somewhat paradoxically, are designed to appeal to System 1 thinking. In the case of the current pandemic, for instance, different audiences could receive the same science-based public health information, but the message framing would vary with the nature of the audience; no one-size-fits-all campaign will be as effective as multiple tailored campaigns. Such campaigns will have to involve close collaboration among specialists in risk communication, advertising, linguistics, and social cognition, so that messages can be formulated in ways that are less likely to engage psychological mechanisms of resistance.

Tailored information campaigns are the easiest defense against mis- and disinformation because they can be undertaken without government involvement. The other “to-do” items listed below require politically difficult actions that are unlikely to be taken today in the United States and probably in other democracies as well.

Regulation could force platform and social media companies to alter their business models by discouraging microtargeting of advertisements (which are nothing more than information-containing messages that some party pays to have presented). The key question in restricting microtargeting is the size of the audience targeted to receive advertisements. The goal of companies reliant on advertising today is ever-smaller audiences, customized and designed for optimal receptivity to the content of a specific message. But as audience size goes down, so do the opportunities for critique and for the presentation of opposing or countering views, and accountability for irresponsible, divisive content is reduced. Regulation could specify a minimum audience size below which targeting would be forbidden.

Regulation could eliminate or circumscribe the freedom from liability currently enjoyed by platform and social media companies for publication of information for which they might otherwise be held legally responsible. Many proposals have been advanced to modify these protections—provided in the United States primarily by Section 230 of the Communications Decency Act—though only one, relating to sex trafficking, has made it into law.

Another to-do would involve actions that increase costs and risks for actors engaged in disinformation campaigns that create health risks for the public during the pandemic. Both the US Food and Drug Administration and the Federal Trade Commission have some authority over advertisements for medical products and devices, and it may be worth exploring the possibility of extending their jurisdiction to medical claims related to COVID-19. However, given First Amendment jurisprudence and history, any such regulation would have to be drawn carefully to avoid infringing on First Amendment protections.

As for foreign actors engaged in such disinformation campaigns, a variety of mechanisms and paths for cost imposition are available, ranging from legal or financial sanctions on the individuals involved to outright cyberattacks against them. Imposing costs by any of these methods, however, risks escalation and retaliation against innocent US citizens. Moreover, it draws the United States into a game of whack-a-mole: because a potentially large number of foreign actors may wish to conduct such campaigns, it is not clear that cost imposition is a viable strategy for significantly reducing harmful disinformation.

A fourth to-do is to restore the vetting and gatekeeping function of governmental intelligence communities. Of course, leaders would have to be willing to trust that gatekeeping function, but even leaders willing to do so might have an interest in a channel that would provide unfiltered information straight from their sources, so to speak. President Obama personally read letters from the public, though they were selected by his staff, and it is hard to imagine that he did not use Internet search engines from time to time.[47] But it could be useful for leaders to have at their sides people who know how to interpret unvetted information flowing in, and specifically how such information might have been intended to manipulate public or leadership opinion. It might also make sense for intelligence communities to report on disinformation efforts that target national leaders; at the very least, such attempts should be made known to the leaders and to the public.

Finally, and perhaps most important, democracies need leaders who respect factual information. It is no accident that the democracies with the worst performance during the COVID-19 pandemic are the United States, the United Kingdom, and Brazil, all led by anti-intellectual populists inclined to minimize the risks of the pandemic for political ends. To improve the effort against the pandemic, voters will have to replace such leaders with those willing to follow the best science-based policy advice.

Notes

[1] Robert Abelson, “Psychological status of the script concept,” American Psychologist 36(7):715–729, 1981, https://doi-org.stanford.idm.oclc.org/10.1037/0003-066X.36.7.715.

[2] https://projects.fivethirtyeight.com/coronavirus-polls/

[3] https://www.reuters.com/article/us-health-coronavirus-usa-polarization/americans-divided-on-party-lines-over-risk-from-coronavirus-reuters-ipsos-poll-idUSKBN20T2O3

[4] https://www.reuters.com/article/us-health-coronavirus-usa-polarization/americans-divided-on-party-lines-over-risk-from-coronavirus-reuters-ipsos-poll-idUSKBN20T2O3

[5] https://www.reuters.com/article/us-health-coronavirus-usa-polarization/americans-divided-on-party-lines-over-risk-from-coronavirus-reuters-ipsos-poll-idUSKBN20T2O3

[6] https://www.reuters.com/article/us-health-coronavirus-usa-polarization/americans-divided-on-party-lines-over-risk-from-coronavirus-reuters-ipsos-poll-idUSKBN20T2O3

[7] https://d25d2506sfb94s.cloudfront.net/cumulus_uploads/document/3rdraw493c/econTabReport.pdf

[8] https://d25d2506sfb94s.cloudfront.net/cumulus_uploads/document/3rdraw493c/econTabReport.pdf

[9] https://d25d2506sfb94s.cloudfront.net/cumulus_uploads/document/3rdraw493c/econTabReport.pdf

[10] Cary Funk and Alec Tyson, “Partisan Differences Over the Pandemic Response Are Growing,” Pew Research Center Science & Society (blog), June 3, 2020, https://www.pewresearch.org/science/2020/06/03/partisan-differences-over-the-pandemic-response-are-growing/; Pew Research Center, “Republicans, Democrats Move Even Further Apart in Coronavirus Concerns,” Pew Research Center – U.S. Politics & Policy (blog), June 25, 2020, https://www.pewresearch.org/politics/2020/06/25/republicans-democrats-move-even-further-apart-in-coronavirus-concerns/.

[11] Margaret Talev, “Axios-Ipsos Poll: Partisanship Is Main Driver of Behavior around Virus,” Axios, accessed July 7, 2020, https://www.axios.com/axios-ipsos-poll-coronavirus-index-15-weeks-e4eb53cc-9bc8-4cac-8285-07e5e5ef6b2b.html.

[12] https://www.mediaite.com/tv/jerry-falwell-jr-suggests-coronavirus-is-a-north-korean-bioweapon-attack-on-u-s/.

[13] https://www.foxnews.com/us/liberty-university-students-want-to-be-here-despite-coronavirus-pandemic-falwell-says.

[14] https://www.nytimes.com/2020/03/29/us/politics/coronavirus-liberty-university-falwell.html.

[15] https://www.dispatch.com/news/20200506/unhappy-with-dr-amy-acton-ohio-house-moves-to-limit-her-power-dewine-blasts-vote

[16] https://www.thenation.com/article/politics/coronavirus-trump-immigration-election/

[17] https://www.mediamatters.org/coronavirus-covid-19/foxs-pete-hegseth-democrats-and-media-are-rooting-coronavirus-spread

[18] https://www.bbc.com/news/world-us-canada-51667670

[19] For a primer on System 1 and System 2 thinking, see Daniel Kahneman, Thinking, Fast and Slow, Farrar, Straus & Giroux, 2011; Richard Petty & John Cacioppo, “The Elaboration Likelihood Model of Persuasion,” Advances in Experimental Social Psychology 19:123-205, 1986. For another variant of dual-system cognitive theory, see Shelly Chaiken, “The heuristic model of persuasion,” in Mark Zanna, James Olson, and C.P. Herman (eds.), Ontario symposium on personality and social psychology. Social influence: The Ontario symposium, 5:3-39, 1987, Lawrence Erlbaum Associates, Inc. For a contrary view on dual-system cognitive theory, see Arie W. Kruglanski and Erik P. Thompson, “Persuasion by a Single Route: A View from the Unimodel,” Psychological Inquiry 10(2):83-109, 1999. All of the claims made in this paragraph are derived from the first three references in this footnote.

[20] Charles S. Taber & Milton Lodge, “Motivated Skepticism in the Evaluation of Political Beliefs,” American Journal of Political Science 50(3):755-769, July 2006.

[21] Raymond S. Nickerson, “Confirmation bias: A ubiquitous phenomenon in many guises,” Review of General Psychology 2(2):175-220, 1998.

[22] See, for example, Bence Bago, David Rand, and Gordon Pennycook, “Fake news, fast and slow: Deliberation reduces belief in false (but not true) news headlines,” Journal of Experimental Psychology: General, 2020, http://dx.doi.org/10.1037/xge0000729.

[23] The classic experiments along these lines (“conformity experiments”) were performed by Solomon E. Asch in the early 1950s. See, for example, Solomon E. Asch, “Effects of Group Pressure Upon the Modification and Distortion of Judgments” in Groups, Leadership, and Men, Harold Guetzkow (ed.), Carnegie Press, pages 177-190, 1951.

[24] Tom Postmes et al., “Social Influence in Computer-Mediated Communication: The Effects of Anonymity on Group Behavior,” Personality & Social Psychology Bulletin 27(10):1243–1252, 2001.

[25] Galen Bodenhausen et al., “Negative affect and social judgment: The differential impact of anger and sadness,” European Journal of Social Psychology 24:45-62, 1994.

[26] Ziva Kunda and Lisa Sinclair, “Motivated Reasoning With Stereotypes: Activation, Application, and Inhibition,” Psychological Inquiry 10(1):12-22, 1999.

[27] See Vinod Goel and Oshin Vartanian, “Negative emotions can attenuate the influence of beliefs on logical reasoning,” Cognition & Emotion 25(1):121-131, 2011, http://www.yorku.ca/vgoel/reprints/GoelVartanian_C&E.pdf.

[28] A more elaborated description of the psychological mechanisms at work in the use of cyber-enabled IW/IO can be found in Herbert Lin and Jaclyn Kerr, “On Cyber-Enabled Information/Influence Warfare and Manipulation,” Stanford University, August 13, 2017, https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3015680.

[29] See, for example, Kate Starbird, “Information Operations and Online Activism within NATO Discourse,” in Three Tweets to Midnight: Effects of the Global Information Ecosystem on the Risk of Nuclear Conflict, Harold Trinkunas, Herbert Lin, and Ben Loehrke (eds.), Hoover Institution Press, Stanford, California, 2020.

[30] Julian E. Barnes, Matthew Rosenberg, and Edward Wong, “As Virus Spreads, China and Russia See Openings for Disinformation,” The New York Times, March 28, 2020, sec. U.S., https://www.nytimes.com/2020/03/28/us/politics/china-russia-coronavirus-disinformation.html; Jessica Brandt and Torrey Taussig, “The Kremlin’s Disinformation Playbook Goes to Beijing,” Brookings (blog), May 19, 2020, https://www.brookings.edu/blog/order-from-chaos/2020/05/19/the-kremlins-disinformation-playbook-goes-to-beijing/.

[31] EEAS Special Report, Disinformation on the Coronavirus – Short Assessment of the Information Environment, March 19, 2020, https://euvsdisinfo.eu/eeas-special-report-disinformation-on-the-coronavirus-short-assessment-of-the-information-environment/. U.S. sources asserting Russian attempts to spread disinformation include Tony Romm, “State Department blames ‘swarms of online, false personas’ from Russia for wave of coronavirus misinformation online,” Washington Post, March 5, 2020, https://www.washingtonpost.com/technology/2020/03/05/state-department-face-fresh-questions-senate-about-coronavirus-misinformation-online/; Julian E. Barnes, Matthew Rosenberg, and Edward Wong, “As Virus Spreads, China and Russia See Openings for Disinformation,” The New York Times, March 28, 2020, sec. U.S., https://www.nytimes.com/2020/03/28/us/politics/china-russia-coronavirus-disinformation.html; and Jessica Brandt and Torrey Taussig, “The Kremlin’s Disinformation Playbook Goes to Beijing,” Brookings (blog), May 19, 2020, https://www.brookings.edu/blog/order-from-chaos/2020/05/19/the-kremlins-disinformation-playbook-goes-to-beijing/.

[32] The article “Bill Gates, a secret laboratory and a conspiracy of pharmaceutical companies: who can benefit from coronavirus” can be found at https://tvzvezda.ru/news/vstrane_i_mire/content/202023353-O9wUV.html. The Politico article is Betsy Woodruff Swan, “State report: Russian, Chinese and Iranian disinformation narratives echo one another,” POLITICO, April 21, 2020, https://www.politico.com/news/2020/04/21/russia-china-iran-disinformation-coronavirus-state-department-193107.

[33] Laura Rosenberger, “China’s Coronavirus Information Offensive,” June 4, 2020, http://www.foreignaffairs.com/articles/china/2020-04-22/chinas-coronavirus-information-offensive.

[34] Mark Kumleben and Samuel C. Woolley, “Gaming Communication on the Global Stage: Social Media Disinformation in Crisis Situations,” in Effects of the Global Information Ecosystem on the Risk of Nuclear Conflict, ed. Harold A. Trinkunas, Herbert S. Lin, and Ben Loehrke (Stanford, Calif: Hoover Institution Press, 2020).

[35] Soroush Vosoughi, Deb Roy, Sinan Aral, “The spread of true and false news online,” Science 359(6380):1146-1151, 09 Mar 2018, DOI: 10.1126/science.aap9559, https://science-sciencemag-org.stanford.idm.oclc.org/content/359/6380/1146.

[36] Jeffrey Lewis, “Bum Dope, Blowback and the Bomb: The Effect of Bad Information on Policy-Maker Beliefs and Crisis Stability,” in Effects of the Global Information Ecosystem on the Risk of Nuclear Conflict, ed. Harold A. Trinkunas, Herbert S. Lin, and Ben Loehrke (Stanford, Calif: Hoover Institution Press, 2020).

[37] Harold A. Trinkunas, Herbert S. Lin, and Ben Loehrke, eds., “What Can Be Done to Minimize the Effects of the Global Information Ecosystem on the Risk of Nuclear War?,” in Effects of the Global Information Ecosystem on the Risk of Nuclear Conflict (Stanford, Calif: Hoover Institution Press, 2020), 193–214.

[38] Kelly Greenhill, “Of Wars and Rumors of Wars: Extra-Factual Information and (In)Advertent Escalation,” in Effects of the Global Information Ecosystem on the Risk of Nuclear Conflict, ed. Harold A. Trinkunas, Herbert S. Lin, and Ben Loehrke (Stanford, Calif: Hoover Institution Press, 2020).

[39] Associated Press, “Pompeo, G-7 Foreign Ministers Spar over ‘Wuhan Virus,’” POLITICO, March 25, 2020, https://www.politico.com/news/2020/03/25/mike-pompeo-g7-coronavirus-149425.

[40] Indeed, loading the launch systems with a nuclear-armed Tomahawk cruise missile would take essentially no conversion effort at all. See https://thebulletin.org/2019/02/russia-may-have-violated-the-inf-treaty-heres-how-the-united-states-appears-to-have-done-the-same/.

[41] Lewis, “Bum Dope, Blowback and the Bomb: The Effect of Bad Information on Policy-Maker Beliefs and Crisis Stability.”

[42] Milton Leitenberg, “Did the SARS-CoV-2 virus arise from a bat coronavirus research program in a Chinese laboratory? Very possibly,” Bulletin of the Atomic Scientists, June 4, 2020, https://thebulletin.org/2020/06/did-the-sars-cov-2-virus-arise-from-a-bat-coronavirus-research-program-in-a-chinese-laboratory-very-possibly/.

[43] Kristian G. Andersen et al., “The proximal origin of SARS-CoV-2,” Nature Medicine 26:450-452, March 17, 2020, https://www.nature.com/articles/s41591-020-0820-9.

[44] Secretary Michael R. Pompeo At a Press Availability, Remarks to the Press, April 29, 2020, https://www.state.gov/secretary-michael-r-pompeo-at-a-press-availability-4/; Laura Taylor, “Germany Asks China to ‘Clarify the Origin’ of The Coronavirus,” EuroWeeklyNews, May 4, 2020, https://www.euroweeklynews.com/2020/05/04/germany-asks-china-to-clarify-the-origin-of-the-coronavirus/.

[45] Interview of Secretary Michael R. Pompeo With Martha Raddatz of ABC’s This Week with George Stephanopoulos, May 3, 2020, https://www.state.gov/secretary-michael-r-pompeo-with-martha-raddatz-of-abcs-this-week-with-george-stephanopoulos/.

[46] https://www.pewresearch.org/fact-tank/2020/04/08/nearly-three-in-ten-americans-believe-covid-19-was-made-in-a-lab/

[47] https://www.nytimes.com/2016/07/03/us/politics/obama-after-dark-the-precious-hours-alone.html

Published at thebulletin.org/